Rate limiting is a technique for capping how often a resource can be accessed within a given period of time. “Limiting” might not sound like a good word at first, but we live in a world with huge consumption rates and limited resources, and software is no different. Limiting access to some resources may be required to keep software reliable and secure.

Depending on the business requirements, limiting access can also help organizations earn revenue from the resources they own.

Either way, limiting resources has its benefits. The concept is not new in software, or in Microsoft’s development stack: it could already be done with third-party libraries or custom implementations. With .NET 7, however, “RateLimiting” is introduced as a built-in feature, which makes it easier for .NET developers to protect their resources.

Because we live in a big, globally connected web, this post gives a brief introduction to the “RateLimiting” middleware in ASP.NET Core. For a deeper dive into rate limiting in .NET 7, I suggest also checking the new APIs in the System.Threading.RateLimiting package, which is the core component behind the RateLimiting middleware for ASP.NET Core.

Microsoft.AspNetCore.RateLimiting

The Microsoft.AspNetCore.RateLimiting package provides a rate limiting middleware for ASP.NET Core applications.

We can add this package to our web application to enable rate limiting. Then, to add the RateLimiting middleware to the pipeline, we use the UseRateLimiter() extension method on IApplicationBuilder (WebApplication):

    app.UseRateLimiter(
        new RateLimiterOptions()
        {
            // Runs every time a request is rejected by a limiter.
            OnRejected = (context, cancellationToken) =>
            {
                context.HttpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                // Log every metadata entry attached to the rejected lease.
                context.Lease.GetAllMetadata().ToList()
                    .ForEach(m => app.Logger.LogWarning($"Rate limit exceeded: {m.Key} {m.Value}"));
                return new ValueTask();
            },
            // Status code returned for rejected requests.
            RejectionStatusCode = StatusCodes.Status429TooManyRequests
        }
    );

This method accepts options that control rate limiting, such as the HTTP status code to return, a custom action to run when a request is rejected, and the limiters (a.k.a. rate limiting algorithms) to apply.

The example above sets HTTP 429 as the response code and registers a delegate that logs the lease metadata whenever a request is rejected.

HTTP 429 (Too Many Requests) is the standard status code for rate-limited requests. So, it is crucial to use this status code for the sake of pandas’ health and the planet. Let’s expand our awareness of HTTP codes.🐼

Next, we need to add limiters to our rate limiting options to define which rate limiting algorithms apply. .NET 7 ships with several built-in algorithms, exposed as extension methods on RateLimiterOptions.

    app.UseRateLimiter(
        new RateLimiterOptions()
        {
            // Runs every time a request is rejected by a limiter.
            OnRejected = (context, cancellationToken) =>
            {
                context.HttpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                // Log every metadata entry attached to the rejected lease.
                context.Lease.GetAllMetadata().ToList()
                    .ForEach(m => app.Logger.LogWarning($"Rate limit exceeded: {m.Key} {m.Value}"));
                return new ValueTask();
            },
            RejectionStatusCode = StatusCodes.Status429TooManyRequests
        }
        // Named limiter: 1 permit, newest-first queue order, queue limit of 0.
        .AddConcurrencyLimiter("controllers",
            new ConcurrencyLimiterOptions(1, QueueProcessingOrder.NewestFirst, 0))
    );

Here we add a simple concurrency limiter. .AddConcurrencyLimiter() limits the number of concurrent requests that can access a resource. Because this is just a simple demonstration, we allow only 1 permit and a queue limit of 0, so while one request is in flight, any other request is rejected immediately instead of waiting.
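To make the "1 permit, 0 queue" behavior concrete, here is a minimal sketch in plain C#. It does not use the middleware or the System.Threading.RateLimiting types; SimpleConcurrencyGate, TryEnter and Exit are hypothetical names made up for this illustration, so treat it as a rough model of the idea rather than how the library is implemented.

```csharp
using System;
using System.Threading;

var gate = new SimpleConcurrencyGate(permitLimit: 1);

Console.WriteLine(gate.TryEnter()); // True: the first request takes the only permit
Console.WriteLine(gate.TryEnter()); // False: a second concurrent request is rejected
gate.Exit();                        // the first request finishes and frees the permit
Console.WriteLine(gate.TryEnter()); // True: the permit is available again

// Hypothetical helper for illustration only; not part of any .NET package.
public sealed class SimpleConcurrencyGate
{
    private readonly int _permitLimit;
    private int _inFlight;

    public SimpleConcurrencyGate(int permitLimit) => _permitLimit = permitLimit;

    // Takes a permit if one is free. There is no queue, so a caller that
    // cannot get a permit is rejected immediately (returns false).
    public bool TryEnter()
    {
        while (true)
        {
            int current = Volatile.Read(ref _inFlight);
            if (current >= _permitLimit) return false;
            // Only succeed if no other thread changed the counter in between.
            if (Interlocked.CompareExchange(ref _inFlight, current + 1, current) == current)
                return true;
        }
    }

    // Must be called exactly once per successful TryEnter.
    public void Exit() => Interlocked.Decrement(ref _inFlight);
}
```

A queue limit above 0 would let rejected callers wait for a permit instead; the sketch leaves that out to match the demo settings.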

Notice that the concurrency limiter above was registered under the name “controllers”. We can then assign it by name to any route endpoint with the .RequireRateLimiting() method.

    app.MapGet("/hello", context =>
    {
        context.Response.StatusCode = StatusCodes.Status200OK;
        return context.Response.WriteAsync("Hello World!");
    }).RequireRateLimiting("controllers");

    // OR

    app.MapControllers().RequireRateLimiting("controllers");

Other limiters

RateLimiterOptions offers other limiters to cover other requirements. For example, with .AddTokenBucketLimiter(), it is possible to limit resources with consumable tokens. Say you have a bucket with 5 tokens and every request takes one token out of the bucket; if the bucket is empty, a request cannot access the resource until the bucket is refilled with tokens. I am not going to dive deeper here, but know that .NET 7 provides several rate limiting algorithms. Also check out .AddFixedWindowLimiter() and .AddSlidingWindowLimiter().
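The bucket analogy can be written down in a few lines of plain C#. This is only a sketch of the algorithm, not the library's TokenBucketRateLimiter: SimpleTokenBucket, TryTake and Replenish are hypothetical names, and the refill is triggered by hand here instead of by a timer.

```csharp
using System;

// Same numbers as the bucket described above: 5 tokens, 1 token added per refill.
var bucket = new SimpleTokenBucket(tokenLimit: 5, tokensPerPeriod: 1);

for (int i = 1; i <= 6; i++)
{
    // Requests 1 to 5 each take a token; request 6 finds the bucket empty.
    Console.WriteLine($"request {i}: {bucket.TryTake()}");
}

bucket.Replenish(); // pretend one replenishment period has elapsed
Console.WriteLine($"after refill: {bucket.TryTake()}");

// Hypothetical helper for illustration only.
public sealed class SimpleTokenBucket
{
    private readonly object _lock = new();
    private readonly int _tokenLimit;
    private readonly int _tokensPerPeriod;
    private int _tokens;

    public SimpleTokenBucket(int tokenLimit, int tokensPerPeriod)
    {
        _tokenLimit = tokenLimit;
        _tokensPerPeriod = tokensPerPeriod;
        _tokens = tokenLimit; // the bucket starts full
    }

    // Takes one token if available; false means the request is rejected.
    public bool TryTake()
    {
        lock (_lock)
        {
            if (_tokens == 0) return false;
            _tokens--;
            return true;
        }
    }

    // Adds tokens for one elapsed period without exceeding the bucket capacity.
    public void Replenish()
    {
        lock (_lock)
        {
            _tokens = Math.Min(_tokenLimit, _tokens + _tokensPerPeriod);
        }
    }
}
```

The capacity bounds how large a burst can be, while the refill rate bounds the sustained request rate.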

To choose which rate limiting algorithm is applied based on business requirements, we can use the .AddPolicy() extension method, which picks a limiter for each request.

And with .AddNoLimiter(), it is possible to bypass rate limiting for an endpoint. The snippet below shows the main idea.

    app.UseRateLimiter(
        new RateLimiterOptions()
        {
            // Runs every time a request is rejected by a limiter.
            OnRejected = (context, cancellationToken) =>
            {
                context.HttpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                // Log every metadata entry attached to the rejected lease.
                context.Lease.GetAllMetadata().ToList()
                    .ForEach(m => app.Logger.LogWarning($"Rate limit exceeded: {m.Key} {m.Value}"));
                return new ValueTask();
            },
            RejectionStatusCode = StatusCodes.Status429TooManyRequests
        }
        // Named limiter: 1 permit, newest-first queue order, queue limit of 0.
        .AddConcurrencyLimiter("controllers",
            new ConcurrencyLimiterOptions(1, QueueProcessingOrder.NewestFirst, 0))
        // Named limiter: bucket of 5 tokens, 1 token added every 5 seconds.
        .AddTokenBucketLimiter("token",
            new TokenBucketRateLimiterOptions(tokenLimit: 5,
                queueProcessingOrder: QueueProcessingOrder.NewestFirst,
                queueLimit: 0,
                replenishmentPeriod: TimeSpan.FromSeconds(5),
                tokensPerPeriod: 1,
                autoReplenishment: false))
        // Policy: choose the limiter per request.
        .AddPolicy(policyName: "token-policy", partitioner: httpContext =>
        {
            // Check if the request has any query string parameters.
            if (httpContext.Request.QueryString.HasValue)
            {
                // If yes, do not apply any rate limiting to this request.
                return RateLimitPartition.CreateNoLimiter<string>("free");
            }
            else
            {
                // If no, apply a token bucket limiter to this request.
                return RateLimitPartition.CreateTokenBucketLimiter("token", key =>
                    new TokenBucketRateLimiterOptions(tokenLimit: 5,
                        queueProcessingOrder: QueueProcessingOrder.NewestFirst,
                        queueLimit: 0,
                        replenishmentPeriod: TimeSpan.FromSeconds(5),
                        tokensPerPeriod: 1,
                        autoReplenishment: false));
            }
        })
        // Named "limiter" that never rejects.
        .AddNoLimiter("free")
    );

    app.MapGet("/free", context =>
    {
        context.Response.StatusCode = StatusCodes.Status200OK;
        return context.Response.WriteAsync("All free to call!");
    }).RequireRateLimiting("free");

    app.MapGet("/hello", context =>
    {
        context.Response.StatusCode = StatusCodes.Status200OK;
        return context.Response.WriteAsync("Hello World!");
    }).RequireRateLimiting("token");

    app.MapGet("/token", context =>
    {
        context.Response.StatusCode = StatusCodes.Status200OK;
        return context.Response.WriteAsync("Token limited to 5 per 5 seconds!");
    }).RequireRateLimiting("token-policy");

    app.MapControllers().RequireRateLimiting("controllers");
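The idea behind the "token-policy" partitioner, deciding per request which limiter applies, can be modeled without ASP.NET Core. The sketch below is a simplified stand-in, not the middleware's implementation: QueryStringPolicy, GetPartitionKey and TryAcquire are hypothetical names, the query string is passed as a plain string, and the bucket is never refilled, to keep the example short.

```csharp
using System;
using System.Collections.Generic;

var policy = new QueryStringPolicy(tokenLimit: 2);

Console.WriteLine(policy.TryAcquire("?page=1")); // True: query string present, no limiter
Console.WriteLine(policy.TryAcquire(""));        // True: takes 1 of 2 tokens
Console.WriteLine(policy.TryAcquire(""));        // True: takes 2 of 2 tokens
Console.WriteLine(policy.TryAcquire(""));        // False: the "token" partition is empty
Console.WriteLine(policy.TryAcquire("?page=1")); // True: the "free" partition is never limited

// Hypothetical type for illustration only; not thread-safe and without refill.
public sealed class QueryStringPolicy
{
    private readonly int _tokenLimit;

    // Remaining tokens per partition key, created lazily on first use.
    private readonly Dictionary<string, int> _remaining = new();

    public QueryStringPolicy(int tokenLimit) => _tokenLimit = tokenLimit;

    // Partitioner: decides which partition (and so which limiter) a request falls into.
    public static string GetPartitionKey(string queryString) =>
        string.IsNullOrEmpty(queryString) ? "token" : "free";

    public bool TryAcquire(string queryString)
    {
        string key = GetPartitionKey(queryString);
        if (key == "free") return true; // no limiter for this partition

        // First request for this key starts with a full bucket.
        if (!_remaining.TryGetValue(key, out int tokens)) tokens = _tokenLimit;
        if (tokens == 0) return false;

        _remaining[key] = tokens - 1;
        return true;
    }
}
```

Because state is kept per partition key, a partitioner that returned something like a user name or client address as the key would give each caller its own bucket.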

Let’s see some action

To get a more solid understanding, we can use a load-testing tool to make requests. With some load and concurrency, we can observe and debug our code and understand what is going on. I used bombardier, a very simple benchmarking tool for HTTP requests, to see the concurrency limiter in action.

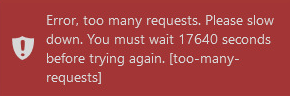

When we run a simple bombardier command, we see HTTP 429 status codes in its output and the corresponding logs from our application, as shown below.

    ./bombardier -c 4 -n 4 http://localhost:5213/weatherforecast
    Bombarding http://localhost:5213/weatherforecast with 4 request(s) using 4 connection(s)
     4 / 4 [=======================================================] 100.00% 19/s 0s
    Done!
    Statistics        Avg      Stdev        Max
      Reqs/sec    1237.40       0.00    1237.40
      Latency      2.93ms    58.29us     3.03ms
      HTTP codes:
        1xx - 0, 2xx - 1, 3xx - 0, 4xx - 3, 5xx - 0
        others - 0
      Throughput:   355.66KB/s

As you may remember from the OnRejected delegate in RateLimiterOptions, the lease metadata tells us why a request was rejected.

    warn: LimitedAPI[0]
          Rate limit exceeded: REASON_PHRASE Queue limit reached
    warn: LimitedAPI[0]
          Rate limit exceeded: REASON_PHRASE Queue limit reached
    warn: LimitedAPI[0]
          Rate limit exceeded: REASON_PHRASE Queue limit reached

We can also try another endpoint from a browser to see a different limiter. Recall from the code snippets above that the /hello endpoint uses the “token” limiter. If we open the endpoint in a web browser and refresh it many times in quick succession, the bucket runs out of tokens.

Checking the console logs then shows the rate limit info:

    warn: LimitedAPI[0]
          Rate limit exceeded: RETRY_AFTER 00:00:05

I hope this brief introduction gives you a feel for the new rate limiting support in .NET 7. I think it is one of the most important new APIs in .NET 7.

Please feel free to share your feedback about the post and your comments about these new .NET 7 APIs.

At the time of writing, the current .NET 7 version is Preview 7, so please be aware that the APIs may change before the RTM release.

You can check out this GitHub repository for the example used in this post.